Leslie Ungerleider: Reading Faces

How the Brain Recognizes Faces and Their Expressions of Emotions

“Face recognition is a remarkable ability, given the tens of thousands of different faces one can recognize, automatically and effortlessly, sometimes even many years after a single encounter,” said National Institute of Mental Health (NIMH) neuroscientist Leslie Ungerleider. Her research has helped to identify how different regions of the brain work together to identify faces as well as to read facial expressions of emotion.

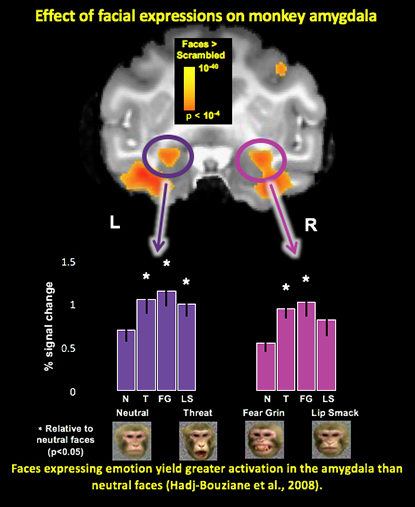

This image is a composite of two figures from the cited article: F. Hadj-Bouziane, A.H. Bell, T.A. Knusten, L.G. Ungerleider, and R.B. Tootell (2008). “Perception of emotional expressions is independent of face selectivity in monkey inferior temporal cortex,” Proceedings of the National Academy of Sciences, 105:5591-5596.

The ability to recognize emotions from facial expressions is essential for effective interpersonal interactions. It enables us to judge the intent, mood, and focus of attention of others so we can respond appropriately in social situations. But some people cannot recognize faces, and others cannot interpret facial expressions. Ungerleider’s work is shedding light on how these brain networks operate in both normal and impaired conditions.

An NIH distinguished investigator who came to NIMH in 1975, Ungerleider has long been devoted to establishing the links between neural structure and cognitive function, especially in the visual system. Her early work with macaque monkeys (Macaca mulatta) involved mapping the visual cortex and identifying the brain’s functional systems. In the 1980s, she and NIMH colleague Mortimer Mishkin formulated the theory of “two cortical visual systems,” which proposes that one visual system specializes in object recognition and another in visuospatial perception. Later, the development of imaging techniques such as functional magnetic resonance imaging (fMRI) and positron emission tomography (PET) made it possible for Ungerleider and others to define brain regions that are important for cognition.

Ungerleider described her current research at an Anita Roberts Lecture that took place on May 10, 2016, in Wilson Hall (Building One). She explained how neuroimaging of the human and nonhuman primate brain has revealed an intricate network of face-selective regions. Core and extended regions each play a role in recognizing specific facial features and interpreting facial expressions.

The core regions are made up of the occipital face area (OFA), which recognizes facial features; the fusiform face area (FFA), which pays attention to size, position, and spatial scale; and the superior temporal sulcus (STS), which tracks movements of the eyes, gaze, lips, and facial expressions. The extended regions include the anterior temporal cortex (ATC), which is responsible for facial identity; the prefrontal cortex (PFC), which maintains a working memory of faces; and the amygdala, which handles emotional processing.

The network is out of whack in people with congenital prosopagnosia (CP), an inherited condition, affecting about two percent of the population, in which individuals are impaired in their ability to recognize familiar faces. Ungerleider and outside colleagues figured out why: There is reduced functional connectivity between the core and extended face-processing regions. As a result, the anterior face region is functionally disconnected from the OFA and FFA, the posterior face regions that would normally supply it with face information.

Ungerleider is also studying how the amygdala, located in the medial temporal lobe of the brain, controls and modulates human emotions. She and her postdoc Fadila Hadj-Bouziane, together with NIMH colleague Elisabeth A. Murray (Laboratory of Neuropsychology), discovered that both the amygdala and ventral temporal cortex help people distinguish facial expressions of fear from neutral expressions. This ability is compromised in Urbach-Wiethe disease, a rare genetic disorder that causes a general thickening of the skin and mucous membranes. In some cases, the disease also causes calcification of brain tissue, which can lead to bilateral damage to the amygdala and result in epilepsy and neuropsychiatric abnormalities. Studying this disease allows researchers to learn about other conditions, such as autism, that exhibit similar neurological symptoms.

In another study in monkeys, Ungerleider and her postdoc Ning Liu are examining how the neurosecretory hormone oxytocin affects the way the amygdala modulates responses to emotional facial expressions. The hormone can influence social behaviors such as building trust and has been proposed as a treatment for people with autism because it may improve their social skills.


The researchers found that intranasal administration of oxytocin reduced the responses of the amygdala, the PFC, and the temporal cortical areas to fearful and threatening facial expressions. Ungerleider plans to explore whether this modulation is mediated by oxytocin receptors in the amygdala.

Staff scientist Shruti Japee and postbac Savannah Lokey in Ungerleider’s lab recently worked with Clinical Center patients who have Moebius syndrome (MoS), a rare congenital neurological disorder that affects cranial nerves 6 and 7 and causes facial paralysis. The researchers found that people with MoS not only lacked the ability to show their emotions through their own facial expressions but also could not detect emotions expressed by others. It therefore appears that the ability to detect emotions in other individuals depends on the feedback one gets from one’s own facial muscles when expressing emotion.

Ungerleider intends to continue this line of work and is conducting parallel studies in humans and monkeys. In the human studies, she aims to understand how emotion detection is impaired in individuals with MoS: She will use fMRI to scan their brains while they perform emotion-detection tasks. In the monkey studies, she will combine electrical stimulation with fMRI to map the neural circuits that mediate the detection of emotional expressions. Her research addresses crucial issues relevant to psychiatric disorders, many of which are characterized by impaired social and emotional perception and behavior.

Anita B. Roberts, who spent 30 years at the National Cancer Institute before her death in 2006, was known for her groundbreaking work on transforming growth factor–beta. The “Anita B. Roberts Lecture Series: Distinguished Women Scientists at NIH” honors the research contributions she and other female scientists have made. Leslie Ungerleider, who gave the May 10 lecture, has more than 40 years of research experience in the field of cognitive neuroscience with a special focus on visual perception and attention. She is a member of the National Academy of Sciences, the American Academy of Arts and Sciences, and the National Academy of Medicine. To watch a videocast of her Anita Roberts lecture (“Functional Architecture of Face Processing in the Primate Brain”), go to http://videocast.nih.gov/launch.asp?19674.

This page was last updated on Wednesday, April 13, 2022