News You Can Use: NHLBI Chat

NHLBI Pilots Internal Generative AI Tooling

BY MEAGAN MARKS, NIAAA

Generative artificial intelligence (AI) tools based on large language models (LLMs) such as OpenAI’s ChatGPT, Google’s Gemini, or Microsoft’s Copilot have received both cheers and jeers for their ability to lighten workloads and increase efficiency in the workplace. But accompanying this new wave of generic public-access tooling comes an undercurrent of trustworthiness concerns and ethical considerations. These concerns arise because the data entered into these public LLMs are not fully controlled, and their output should be assessed with caution.

For these reasons, the NIH Office of the Chief Information Officer recommends no sensitive information be shared with the public models.

NIH employees have nonetheless expressed their desire for this type of tooling and technology in their day-to-day work, so a team at NHLBI created an internal version in which the tooling can be used while sensitive information is protected.

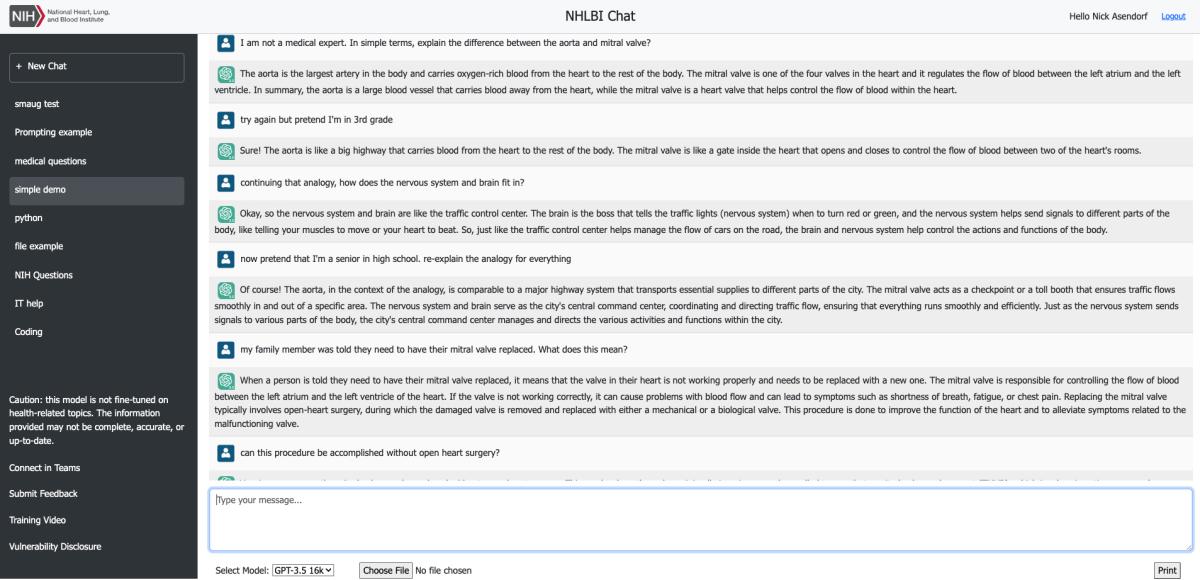

Called NHLBI Chat, this internal-only LLM chatbot tool developed for NHLBI staff emulates the functionality of public models while being customizable for NHLBI users.

“This technology can accelerate how the NIH operates if employees know how to best use it in a safe manner,” said Nick Asendorf, scientific information officer at the NHLBI Office of Scientific Information. Asendorf collaborated on the tool with colleagues Anthony Fletcher and Robyn Wyrick.

According to Asendorf, NHLBI Chat is a “front-end wrapper” that leverages models available in Microsoft’s Azure OpenAI Service. The available models are not trained on NIH, NHLBI, or medically specific data, but the data entered and received by NHLBI employees are kept within NHLBI’s data center. This additional internal layer allows employees to enter sensitive research-related information without the data exposure and breach risks that would come with using the public models.
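As a rough illustration of what a “front-end wrapper” means in general, the Python sketch below shows a thin layer that keeps the conversation history, lets the user pick from an approved list of models, and forwards each prompt to a model backend. It is not NHLBI Chat's actual code: the class name `ChatWrapper`, the model labels, and the `echo_backend` stand-in (used here in place of a real hosted model call) are all assumptions made for this example.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List

# Hypothetical backend signature: (model name, message history) -> reply text.
# A real deployment would call a hosted model service here instead.
Backend = Callable[[str, List[Dict[str, str]]], str]


@dataclass
class ChatWrapper:
    """Illustrative front-end wrapper; all names are assumptions."""

    backend: Backend
    allowed_models: tuple = ("model-a", "model-b")  # placeholder labels
    model: str = "model-a"
    history: List[Dict[str, str]] = field(default_factory=list)

    def set_model(self, name: str) -> None:
        # Only models on the approved list can be selected.
        if name not in self.allowed_models:
            raise ValueError(f"model {name!r} is not on the approved list")
        self.model = name

    def ask(self, prompt: str) -> str:
        # Record the user's message, forward the whole conversation to the
        # backend, then record and return the reply.
        self.history.append({"role": "user", "content": prompt})
        reply = self.backend(self.model, list(self.history))
        self.history.append({"role": "assistant", "content": reply})
        return reply


def echo_backend(model: str, messages: List[Dict[str, str]]) -> str:
    # Stand-in backend for demonstration: echoes the latest message.
    return f"[{model}] {messages[-1]['content']}"


if __name__ == "__main__":
    chat = ChatWrapper(backend=echo_backend)
    print(chat.ask("Summarize this paragraph."))
    chat.set_model("model-b")
    print(chat.ask("Proofread this sentence."))
```

In this sketch the wrapper itself contains no model. It only decides which model is used and what is sent to it, which is the sense in which such a layer can sit between staff and the underlying service.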

Using NHLBI Chat can increase work efficiency by taking over tedious work-related tasks, thus giving NHLBI employees more time to focus on research projects, patient care, or administrative or business tasks. For example, you can ask NHLBI Chat to summarize a paper, proofread a grant, draft an email, or write a line of code.

CREDIT: NHLBI

NHLBI Chat was developed by the NHLBI Office of Scientific Information. Functions include the ability to switch between the ChatGPT 3.5 and ChatGPT 4 models.

“I wouldn’t treat patients with it, but there are definitely good uses for this software,” said Fletcher.

Fletcher also pointed out that the program can be especially helpful for fellows who are non-native English speakers because it can help with grammar and word choice on important documents.

The creators of NHLBI Chat are hopeful that all NIH employees will have access to this kind of internal tooling soon. For now, the chatbot is in its pilot phase and has only been released to a testing group at NHLBI. There are still restrictions on patient-related information and other sensitive data that must be worked through, and technical use and cybersecurity training will be necessary, too.

“It once took technological knowledge, infrastructure, and authorization to get to use email the way we do now,” Wyrick explained. “We are at that same point with large language models and other AI interactive tools.”

Fletcher agreed, adding, “This system does hallucinate, so you can ask it a question and it could just flat out lie to you. Like a Google search, you need to have the best judgment about what you are being told.”

The next step is to create a concrete strategy on how this type of technology can be enabled at other ICs across NIH.

“We need a unified approach that is rooted in security, but also in scientific discovery and experimentation,” said Asendorf. “We need to get this type of technology into the hands of everyone so that they can learn best-use cases, pitfalls, and how to integrate this tooling into their daily workflows.”

The trio developed an NHLBI Chat Training Video (NIH-only SharePoint folder) and recommends that interested individuals review the NIH Guidelines to Public Tooling Use webpage.

NIH Artificial Intelligence Symposium 2024

Friday, May 17, Masur Auditorium, Building 10

Share your AI-related research at the upcoming NIH AI Symposium, sponsored by NHLBI, in partnership with FAES. Abstract submissions for poster presentation or a short talk of 12–15 minutes are encouraged. Online abstract submission and event registration are available at this link.

Important dates:

- March 15 - Abstract submission deadline

- April 5 - Abstract notifications

- May 3 - Registration deadline

Questions? Email Ryan O’Neill at oneillrs@nih.gov.

ICYMI: NIH Director Monica Bertagnolli recently published an article titled “Advancing health through artificial intelligence/machine learning: The critical importance of multidisciplinary collaboration.”

We asked OpenAI ChatGPT 3.5 to provide a synopsis of the article for you, our readers. The prompt given to the free, public version of ChatGPT was to synthesize the full-text article in 50 words.

“The integration of artificial intelligence/machine learning (AI/ML) in health research presents significant potential, enabling the analysis of complex datasets for predicting outcomes across various biomedical fields. While the prospects are vast, challenges include addressing diverse patient needs, incorporating human judgment in model development, and standardizing clinical data. The multidisciplinary approach, exemplified by initiatives like the National Institutes of Health Multi-Omics for Health and Disease Consortium, emphasizes collaboration in generating and analyzing complex data. The challenge lies in obtaining high-quality, diverse clinical data, with ongoing efforts to standardize electronic health records. AI/ML’s success hinges on interdisciplinary collaboration, considering economic, clinical, and social factors to avoid unintended biases. As AI/ML navigates the complexities of human biology, the focus must remain on meeting patient and societal needs while interpreting results responsibly.”

You may notice the output included an incomplete sentence and was 128 words, not 50. All of us at the Catalyst have a hard time keeping within word count too, so we get it; however, we recommend our readers review Bertagnolli’s article in its entirety to get the real scoop.

Meagan Marks is a postbaccalaureate fellow in David Lovinger’s Laboratory for Integrative Neuroscience at NIAAA, where she studies motivation and reward. In her spare time, she enjoys exercising, playing the guitar, and exploring the D.C. area.

The Demystifying Medicine Series will host Holden Thorp, editor-in-chief of the Science family of journals, and Vardit Ravitsky with the Hastings Center, on May 7, 4–6 p.m., to discuss “Artificial Intelligence in Scientific Publishing.” Catch it on VideoCast.

This page was last updated on Monday, December 2, 2024