The SIG Beat: Artificial Intelligence

NEWS FROM AND ABOUT THE SCIENTIFIC INTEREST GROUPS

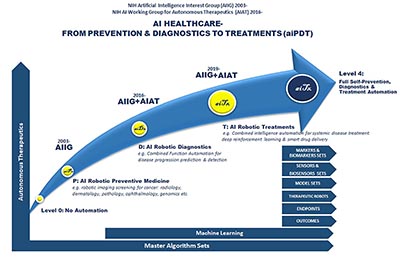

Artificial Intelligence Health Care

From Prevention and Diagnostics to Treatment

CREDIT: JUNE LEE, NICHD

Autonomous vehicles, wearable technology, traffic-aware maps, voice-activated virtual assistants. The opening introductions in a recent artificial-intelligence (AI) workshop mentioned all of these and more, underlining the prevalence of AI in everyday life. In basic biomedical research, AI is being used to interpret neurological images, correlate genetic variants with risk of disease, design and develop drugs, and more. AI’s clinical applications include helping to diagnose disease and determine treatment options for cancer, assisting in robotic surgery, and aiding in the processing of electronic health records.

Scientists from academia, industry, and the federal government gathered for the workshop “Artificial Intelligence Healthcare–From Prevention and Diagnostics to Treatment” on October 1, 2019. The introductions by June Lee (chair of the NIH AI Interest Group and of the NIH AI Working Group for Autonomous Therapeutics) and Susan Gregurick (NIH associate director for data science and director of the Office of Data Science Strategy) prepared the audience for a fascinating view into the present and future of AI. The rapidly growing NIH funding in this area demonstrates the importance of AI in biomedicine.

The Defense Advanced Research Projects Agency (DARPA) has been investing in AI since 1960, according to DARPA Deputy Director Peter Highnam. AI tools can make opaque decisions for which the rationale is buried within the program. For that reason, Highnam stressed the importance of having “explainable AI,” in which humans can see and understand the evidence for decisions.

Equally important is “machine common sense”: the very difficult technical problem of getting an AI program to produce the correct answers to questions such as “Can you touch a color?” DARPA-funded research is aiming for automated detection of battlefield injuries; healing of blast and burn injuries using bandages that sense and respond to the severity of the wound; and fully automated pipelines to produce small-molecule drugs in hours rather than weeks. Highnam concluded his talk by showing a video of a wounded veteran with a state-of-the-art prosthetic arm that has tactile sensors and can rotate 360 degrees. The veteran has learned to use brain signals to control the hand, even making it turn “backwards.”

Just as exciting was the second keynote by Richard Satava, professor emeritus of surgery at the University of Washington Medical Center (Seattle), who discussed the current use of AI-based robotic and image-guided surgery. Soon to come, he said, is minimally invasive and remote surgery. The surgeon guiding the robot becomes more of an information manager, responding to the data provided by live imaging during the surgery. Human surgeons, Satava pointed out, can achieve precision of 100 microns (about the size of a grain of salt) during surgery, while a robot achieves precision of 10 microns (approximately the size of a cell). The robot-assisted surgeon could conceivably remove individual cancer cells instead of chunks of tissue, and the patient would recover faster. Satava went on to describe even more futuristic implementations of AI, such as virtual autopsies based on 3-D reconstruction of skeletal images and handheld diagnostic and therapeutic surgical devices that patients could operate themselves.

Diana Bianchi, director of the Eunice Kennedy Shriver National Institute of Child Health and Human Development (NICHD), discussed opportunities for AI in maternal and child health. She described an NICHD-funded consumer device that attaches to a smartphone with AI software. The device can analyze saliva and predict ovulation more accurately and easily than current technology. Other NICHD-funded projects include AI analysis of whole-genome sequences from sick newborns for quicker diagnosis and promising AI-based technologies for detecting jaundice in newborns. Research is ongoing to improve electronic fetal monitoring, predict women’s risk of maternal morbidity, and study placental function. AI analysis of magnetic-resonance images of brains of preterm infants may help predict cognitive deficits and could make it possible to identify disabilities earlier.

On the intramural front, June Lee talked about autonomous therapeutics. For example, the NIH AI Working Group for Autonomous Therapeutics is developing an AI robotic platform that, with little or no human intervention, would diagnose and treat diseases. Francisco Pereira, who leads the Machine Learning Team within the National Institute of Mental Health’s Functional Magnetic Resonance Imaging Core, gave a brief talk on building AI models by combining neural networks developed at many sites (universities and hospitals) into a single, better network, without the private datasets used to train them ever having to be shared.

AI technologies bring their own challenges, of course, and several speakers mentioned the difficulty of obtaining large, well-curated datasets to train the AI software. “Multilingual” researchers who understand both biomedicine and AI technology are crucial for the growth of this field. Bakul Patel, director of FDA’s Division of Digital Health, discussed the unusual challenges of regulating AI-based products. Such challenges include identifying and removing bias in AI software; ensuring that the medical results or predictions of these AI algorithms can be understood and explained by humans; and overseeing AI-based products that learn and change over time as additional data are obtained.

Along with the excitement generated by these revolutionary new technologies, there is considerable concern about the ethical and social implications. Bianchi pointed out that healing often requires “the human touch” and that we should be thinking about maintaining the humanity of health care in the AI environment. Satava brought up some philosophical questions for the more distant future: What makes us human? If human lifespans are extended thanks to AI, can the Earth support a growing and longer-lived population? And how will we occupy ourselves if AI-based machines replace us at work? People also wondered whether we should trust the diagnostic recommendations of an AI program. The consensus of the panelists was that AI output should always be interpreted by a physician.

The workshop also featured about a dozen 10-minute presentations by scientists from NIH, NASA, FDA, Johns Hopkins University (Baltimore), the University of Maryland Baltimore Campus (Baltimore), and Microsoft. In addition, there were two panel discussions with the speakers in which the audience took the opportunity to ask intriguing questions: Can AI help remote or disadvantaged populations? Can it help to reduce health-care costs? Can AI technology being used at one hospital be easily implemented at others?

CREDIT: CHIA-CHI CHARLIE CHANG

NIH AI Workshop Group Photo.

The all-day workshop, which took place on October 1, 2019, was organized by the NIH Artificial Intelligence Interest Group, the Office of Intramural Research, and the NIH AI Working Group for Autonomous Therapeutics. A videocast is available at https://videocast.nih.gov/launch.asp?28761. To read an article on the AI SIG in the November-December 2018 issue of the NIH Catalyst, go to https://irp.nih.gov/catalyst/v26i6/the-sig-beat. For more information about the SIG and instructions for how to join the LISTSERV email list, go to https://oir.nih.gov/sigs/artificial-intelligence-interest-group. You can also contact the chair, June Lee, at LeeJun@mail.nih.gov.

This page was last updated on Tuesday, March 29, 2022