Crowdsourcing Accelerates the Pace of Toxicity Testing

Scientists at the National Institute of Environmental Health Sciences (NIEHS) have turned to crowdsourcing as a way to help analyze massive datasets and develop new models to predict the toxicity of pharmacological and environmental chemicals.

Crowdsourcing involves using collective intelligence to answer a problem. The idea is that many hands make light work—or, in this case, that many heads make for more efficient problem solving. And what better way to get a scientific crowd together to tackle a problem than to issue a challenge?

It might even be right for your project.

Crowdsourcing initiatives “get the best and the brightest contributing their knowledge to get the best result,” said NIEHS Deputy Director Rick Woychik, who helps steer NIH policy regarding big-data challenges.

In 2013, scientists at NIEHS, the National Center for Advancing Translational Sciences (NCATS), the University of North Carolina (UNC; Chapel Hill, N.C.), and two nonprofits, DREAM (Dialogue for Reverse Engineering Assessments and Methods) and Sage Bionetworks (Seattle, Wash.), partnered to launch a Toxicogenetics Challenge that asked participants to use genetic and cytotoxicity data to develop algorithms to predict the toxicity of different chemicals. Analyses such as these are aimed at understanding why different people exposed to the same chemical in the same environment display different reactions.

The Toxicogenetics Challenge was the brainchild of Raymond Tice, chief of the Biomolecular Screening Branch within the National Toxicology Program (an NIEHS-based interagency program dedicated to testing and evaluating substances in the environment), and Allen Dearry, director of NIEHS’s Office of Scientific Information Management.

“We’re limited by our own experiences,” said Tice. But “there are other groups out there that will take a novel approach and potentially come up with something really exciting.”

“This partnership and challenge [provide] powerful scientific insights and meaningful public-health impact by accelerating the pace of toxicity testing,” Dearry added.

The three-month competition, which was launched on June 10, 2013, comprised two subchallenges that required participants to develop computer models to predict 1) how an individual’s genetics affect responses to chemical exposures and 2) chemicals’ toxicity to cells based on chemical-structure information.

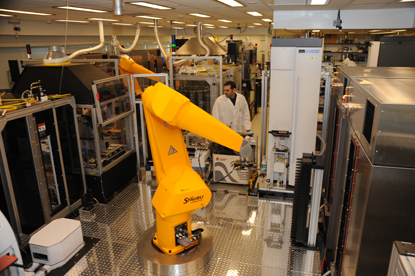

COURTESY OF NCATS

The Toxicogenetics Challenge—hosted by NIEHS, NCATS, UNC, and two nonprofits—dared the computational informatics “crowd” to develop algorithms to predict the toxicity of 156 different chemicals. NCATS’s Chemical Genomics Center used its Tox21 robot system to conduct high-throughput screens as part of the challenge.

The participants were given access to data from one of the largest population-based toxicity studies ever conducted. The study tested 884 human lymphoblastoid cell lines (obtained from the NIH-led 1000 Genomes Project) that had been treated with 156 chemicals. The datasets were generated by NIEHS, NCATS, and UNC scientists. NCATS used its robotic equipment, funded by NIEHS, to conduct high-throughput assays that measured the effect of eight different concentrations of each chemical on cellular levels of adenosine triphosphate (ATP). The lower the ATP concentration, the worse the cell population was growing.
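To make the assay readout concrete, here is a minimal Python sketch of how an eight-point ATP measurement might be summarized for one chemical and one cell line. It assumes the eight concentrations are doses of the test chemical and that ATP signal is the viability readout. The function names, the 50 percent threshold, and all numbers are hypothetical illustrations, not the challenge's actual data or scoring method.

```python
from typing import List, Optional, Sequence


def relative_viability(atp_signal: Sequence[float], control_signal: float) -> List[float]:
    """Express each ATP reading as a fraction of the untreated-control reading."""
    if control_signal <= 0:
        raise ValueError("control_signal must be positive")
    return [signal / control_signal for signal in atp_signal]


def lowest_toxic_concentration(
    concentrations: Sequence[float],
    atp_signal: Sequence[float],
    control_signal: float,
    threshold: float = 0.5,  # hypothetical cutoff: below 50% of control counts as toxic
) -> Optional[float]:
    """Return the lowest tested concentration whose relative ATP level falls
    below the threshold, or None if no tested concentration does."""
    if len(concentrations) != len(atp_signal):
        raise ValueError("need one ATP reading per concentration")
    viability = relative_viability(atp_signal, control_signal)
    # Walk the doses from lowest to highest and stop at the first toxic one.
    for concentration, fraction in sorted(zip(concentrations, viability)):
        if fraction < threshold:
            return concentration
    return None


# Hypothetical example: eight doses of one chemical on one cell line.
doses = [0.01, 0.03, 0.1, 0.3, 1.0, 3.0, 10.0, 30.0]   # arbitrary units
atp = [980.0, 1010.0, 950.0, 900.0, 760.0, 480.0, 210.0, 90.0]
control = 1000.0                                        # untreated-well ATP signal

result = lowest_toxic_concentration(doses, atp, control)
print(result)
```

A lower value returned for one cell line than for another would suggest greater sensitivity to that chemical, which is the kind of person-to-person variation the challenge asked participants to predict.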

The response from the computational informatics “crowd” was excellent: Thirty-four teams submitted 99 entries to the first subchallenge; 24 teams submitted 85 entries to the second.

“The submission of so many models is a further testimony to the value of the data generated through our collaboration with NIEHS and UNC,” said Anton Simeonov, NCATS’s acting deputy scientific director. The challenge “capitalized on NCATS’s [ability to] test thousands of chemicals, the NIEHS expertise in toxicology, and the UNC expertise in genomics.”

The winning teams for both subchallenges were from the Quantitative Biomedical Research Center at the University of Texas Southwestern (Dallas). They will work with UNC and NIEHS researchers to publish their findings and algorithms in Nature Biotechnology.

Challenges such as the Toxicogenetics Challenge lead to a better understanding of the relationships between chemicals and genes, between genes and pathways, and between genes and diseases, said Tice.

This page was last updated on Wednesday, April 27, 2022